Best Practices for Seabed Surveys

Surveying is expensive and complex, often costing in excess of 100,000 € per day, and the requirements - and constraints - of every survey are...

The recent Hydro International article “The Pressure Beneath the Surface” captures a reality many subsea and hydrographic professionals know all too well: the pressure is no longer just physical; it is digital. Increasing survey frequency, higher-resolution sensors, expanding offshore assets, and tighter delivery timelines generate tremendous data pressure and are pushing existing data workflows to their limits.

What stands out in the article is not how new these challenges are but how familiar they are across offshore wind, subsea cables, marine infrastructure, and defense. The industry is not struggling to collect data. It is struggling to cope with it.

This is not a futuristic outlook but the day-to-day reality of subsea and hydrographic work. Importantly, solutions to these challenges already exist.

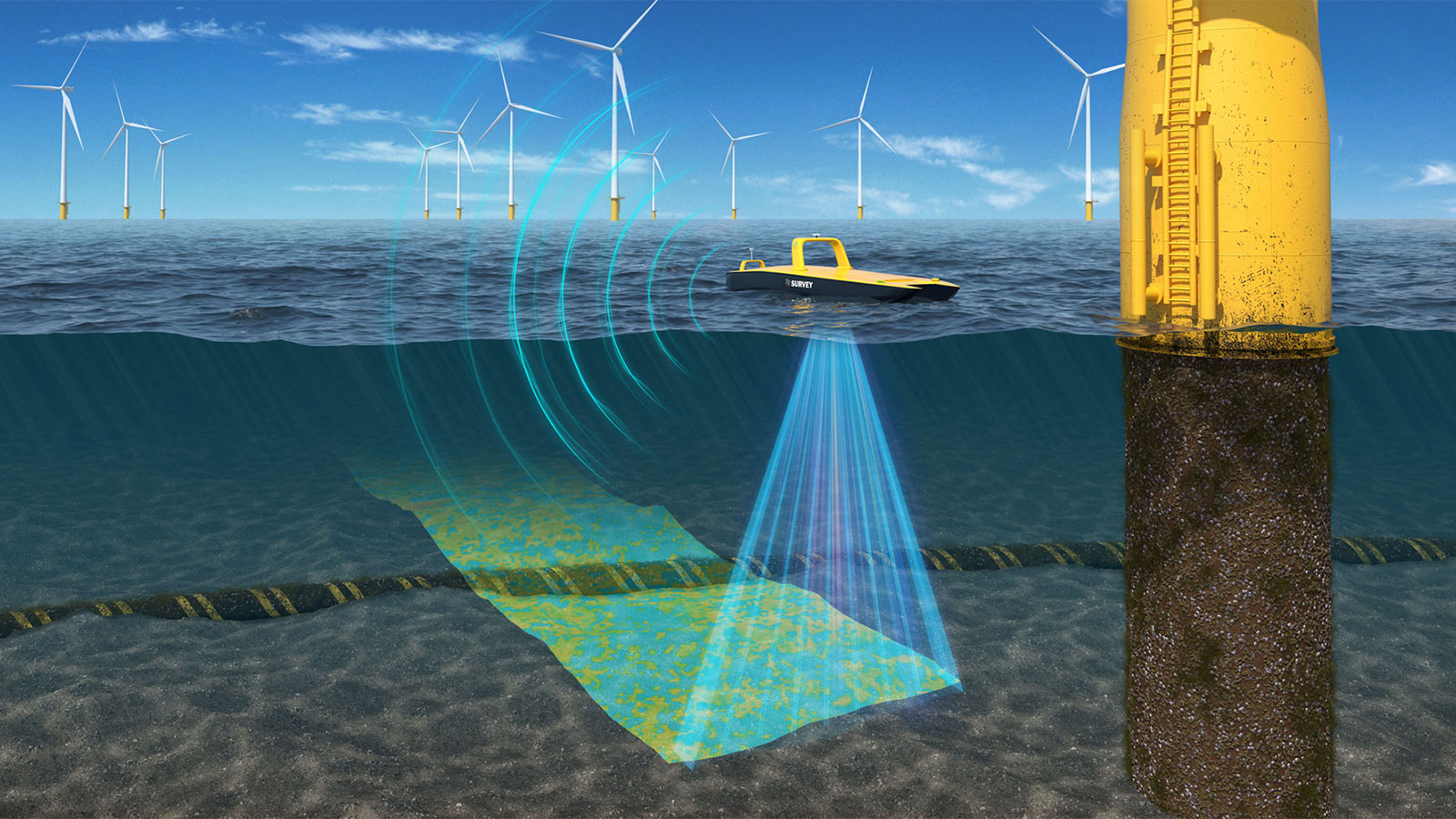

Modern subsea operations depend on acoustic sensors to make the invisible visible. Multibeam echosounders, side-scan sonars, sub-bottom profilers, and related systems generate extraordinarily detailed representations of the seabed and subsurface. Each survey delivers immense value but also terabytes of complex, unstructured raw data.

Hydro International rightly highlights several pressure points:

These pressures are not isolated technical issues. They are systemic. And they compound as projects grow in size, regulatory scrutiny increases, and margins tighten.

In many organizations, highly qualified geophysicists and hydrographers spend a significant portion of their time simply finding, moving, opening, and checking data rather than interpreting it. This is not a skills problem. It is a data management and architecture problem.

What we describe as Ocean Big Data reflects a fundamental change in how subsea information is generated and used. Raw underwater sensor data today clearly meets all three dimensions of big data: volume, velocity, and variety.

Yet much of the industry still relies on software paradigms and workflows developed in the 1990s, when datasets were smaller, surveys were less frequent, and collaboration was local.

The consequence is friction everywhere: duplicated data, manual handovers, sample-based quality control, and long feedback loops between survey, engineering, and decision-making teams.

This is precisely where the pressure described in Hydro International originates.

The Hydro International article presents these as emerging themes. In reality, the technological shift is already under way beneath the surface of daily operations.

Across the industry, solution providers are moving away from:

And toward:

All of this aims to support expert judgment, not replace it, by removing the friction that keeps experts from applying their knowledge where it matters most.

When raw subsea data becomes centrally accessible, spatially indexed, and technically validated upon ingestion, several things change immediately:

None of this requires futuristic technology. It requires applying proven big data principles (already standard in other industries) to the realities of subsea operations.
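To make "centrally accessible, spatially indexed, and technically validated upon ingestion" more concrete, here is a minimal Python sketch under stated assumptions. It assumes each raw file arrives with a sensor type, a geographic bounding box, and a time span; the `SurveyFile` record, the `KNOWN_SENSORS` set, the checks in `validate`, and the 0.1° grid are all hypothetical illustrations, not the design of any particular product. Files that fail basic checks are rejected at ingestion with a reason attached; files that pass are registered in a simple uniform grid, so anyone can ask "which files cover this area?" without opening a single raw file.

```python
import math
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class SurveyFile:
    """Hypothetical metadata record extracted from one raw sensor file."""
    path: str
    sensor: str        # assumed sensor codes, e.g. "MBES", "SSS", "SBP"
    min_lon: float
    min_lat: float
    max_lon: float
    max_lat: float
    start_time: float  # seconds since an arbitrary epoch
    end_time: float


KNOWN_SENSORS = {"MBES", "SSS", "SBP"}  # hypothetical accepted sensor types


def validate(f: SurveyFile) -> list:
    """Return human-readable problems; an empty list means the file passes."""
    problems = []
    if f.sensor not in KNOWN_SENSORS:
        problems.append(f"unknown sensor type {f.sensor!r}")
    if not (-180.0 <= f.min_lon <= f.max_lon <= 180.0):
        problems.append("invalid longitude extent")
    if not (-90.0 <= f.min_lat <= f.max_lat <= 90.0):
        problems.append("invalid latitude extent")
    if f.end_time < f.start_time:
        problems.append("end time precedes start time")
    return problems


class GridIndex:
    """Uniform lon/lat grid mapping each cell to the files whose bounding box touches it."""

    def __init__(self, cell_deg: float = 0.1):
        self.cell_deg = cell_deg
        self.cells = defaultdict(list)  # (i, j) -> [SurveyFile, ...]
        self.rejected = {}              # path -> [problem, ...]

    def _cells(self, min_lon, min_lat, max_lon, max_lat):
        c = self.cell_deg
        for i in range(math.floor(min_lon / c), math.floor(max_lon / c) + 1):
            for j in range(math.floor(min_lat / c), math.floor(max_lat / c) + 1):
                yield (i, j)

    def ingest(self, f: SurveyFile) -> bool:
        """Validate on ingestion; index the file only if it passes."""
        problems = validate(f)
        if problems:
            self.rejected[f.path] = problems
            return False
        for cell in self._cells(f.min_lon, f.min_lat, f.max_lon, f.max_lat):
            self.cells[cell].append(f)
        return True

    def query(self, min_lon, min_lat, max_lon, max_lat) -> list:
        """Return indexed files whose bounding box overlaps the query box."""
        hits = {}
        for cell in self._cells(min_lon, min_lat, max_lon, max_lat):
            for f in self.cells.get(cell, []):
                # The grid only narrows candidates; confirm with an exact box-overlap test.
                if (f.min_lon <= max_lon and f.max_lon >= min_lon
                        and f.min_lat <= max_lat and f.max_lat >= min_lat):
                    hits[f.path] = f
        return list(hits.values())


# Example usage with hypothetical file names and coordinates.
idx = GridIndex(cell_deg=0.1)
idx.ingest(SurveyFile("line_001.all", "MBES", 2.00, 51.00, 2.05, 51.02, 0, 3600))
idx.ingest(SurveyFile("line_002.xtf", "SSS", 3.00, 52.00, 3.10, 52.05, 0, 3600))
idx.ingest(SurveyFile("line_003.raw", "XYZ", 2.00, 51.00, 2.10, 51.10, 10, 5))
hits = idx.query(1.99, 50.99, 2.01, 51.01)
print([f.path for f in hits])  # ['line_001.all']
print(idx.rejected)
```

A uniform grid is the simplest possible spatial index and is used here only for readability; a production system would more likely rely on an R-tree or a quadtree-style scheme, and a real validator would also inspect the file contents rather than trusting the metadata alone.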

One of the most critical data pressures highlighted by the industry today is quality control (QC). With data volumes growing faster than teams, QC often becomes selective, manual, and time-constrained.

This creates an uncomfortable gap between the cost of data acquisition and the confidence in its usability.

Modern approaches are closing this gap by combining:

The result is not just faster QC but greater trust in the data and a better return on investment for large survey contracts.
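The difference between sample-based and exhaustive QC can be sketched in a few lines. The example below is a hypothetical illustration, not any vendor's method: it runs simple rule-based checks on every multibeam ping instead of a sample, looking at the fraction of beams without a return, depth spikes relative to the ping's median depth, and time gaps between consecutive pings. The `Ping` structure, the function name `qc_all_pings`, and all three thresholds are assumptions chosen for readability; real acceptance criteria would come from the survey specification.

```python
from dataclasses import dataclass
from statistics import median
from typing import List, Optional


@dataclass
class Ping:
    """Hypothetical, simplified multibeam ping: a timestamp and one depth per beam."""
    time_s: float
    depths_m: List[Optional[float]]  # None marks a beam with no valid return


def qc_all_pings(pings, max_missing_frac=0.1, spike_m=5.0, max_gap_s=2.0):
    """Check every ping rather than a sample; return {ping_index: [issue, ...]}.

    All thresholds are illustrative assumptions, not values from any standard.
    """
    issues = {}
    prev_time = None
    for i, p in enumerate(pings):
        found = []
        n = len(p.depths_m)
        valid = [d for d in p.depths_m if d is not None]
        missing = n - len(valid)

        # 1. Coverage: too many beams without a return.
        if n == 0:
            found.append("no beams recorded")
        elif missing / n > max_missing_frac:
            found.append(f"{missing} of {n} beams missing")

        # 2. Spikes: beams far from the median depth of the same ping.
        if valid:
            med = median(valid)
            spikes = sum(1 for d in valid if abs(d - med) > spike_m)
            if spikes:
                found.append(f"{spikes} beam(s) deviate more than {spike_m} m from ping median")

        # 3. Continuity: unexpected time gap since the previous ping.
        if prev_time is not None and p.time_s - prev_time > max_gap_s:
            found.append(f"{p.time_s - prev_time:.1f} s gap since previous ping")
        prev_time = p.time_s

        if found:
            issues[i] = found
    return issues


# Example usage with made-up depths in metres.
pings = [
    Ping(0.0, [20.1, 20.3, 20.2, 20.4]),  # clean
    Ping(0.5, [20.2, None, None, 20.3]),  # half the beams missing
    Ping(1.0, [20.0, 35.0, 20.1, 20.2]),  # one spike
    Ping(4.0, [20.1, 20.2, 20.3, 20.2]),  # 3 s gap before this ping
]
# Flags pings 1, 2 and 3; ping 0 passes.
for index, problems in qc_all_pings(pings).items():
    print(index, problems)
```

Because every ping is checked, the output is a complete list of flagged pings for a hydrographer to review rather than an extrapolation from a subset. Deciding whether a flagged spike is noise or a genuine seabed feature remains an expert call.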

The pressure beneath the surface is real. However, it can be turned into an operational opportunity.

We are witnessing a transition phase in which the subsea and offshore industries are recognizing that prevailing data infrastructures can no longer cope with growing data volumes.

Solutions already exist that address the bottlenecks described by Hydro International. They are not silver bullets, and they are not about removing human expertise. They are about enabling it to scale.

Ocean Big Data is not simply the byproduct of offshore operations. It is the digital foundation that allows those operations to remain safe, efficient, and economically viable in an increasingly complex marine environment.

The organizations that recognize this shift early will not just relieve pressure; they will turn it into a competitive advantage.
